Tag: learning

-

The Inclusivity of Empathy in Group Activities

Reading Time: 4 minutes

I love to hike fast. I also love to walk fast. When I am hiking, I love to hike my own hike and then wait for people at regular intervals so that they can catch up, rest a little, and then continue. When cycling with some groups I will be left alone…

-

Swisscom MyAI as tutor

Reading Time: 2 minutes

Yesterday I asked MyAI by Swisscom, which is still in beta, whether it could help me write a JavaScript app to generate passwords, and it did so with ease. It provided the JavaScript code I needed so I could cut and paste it, and then use node to run it straight…

-

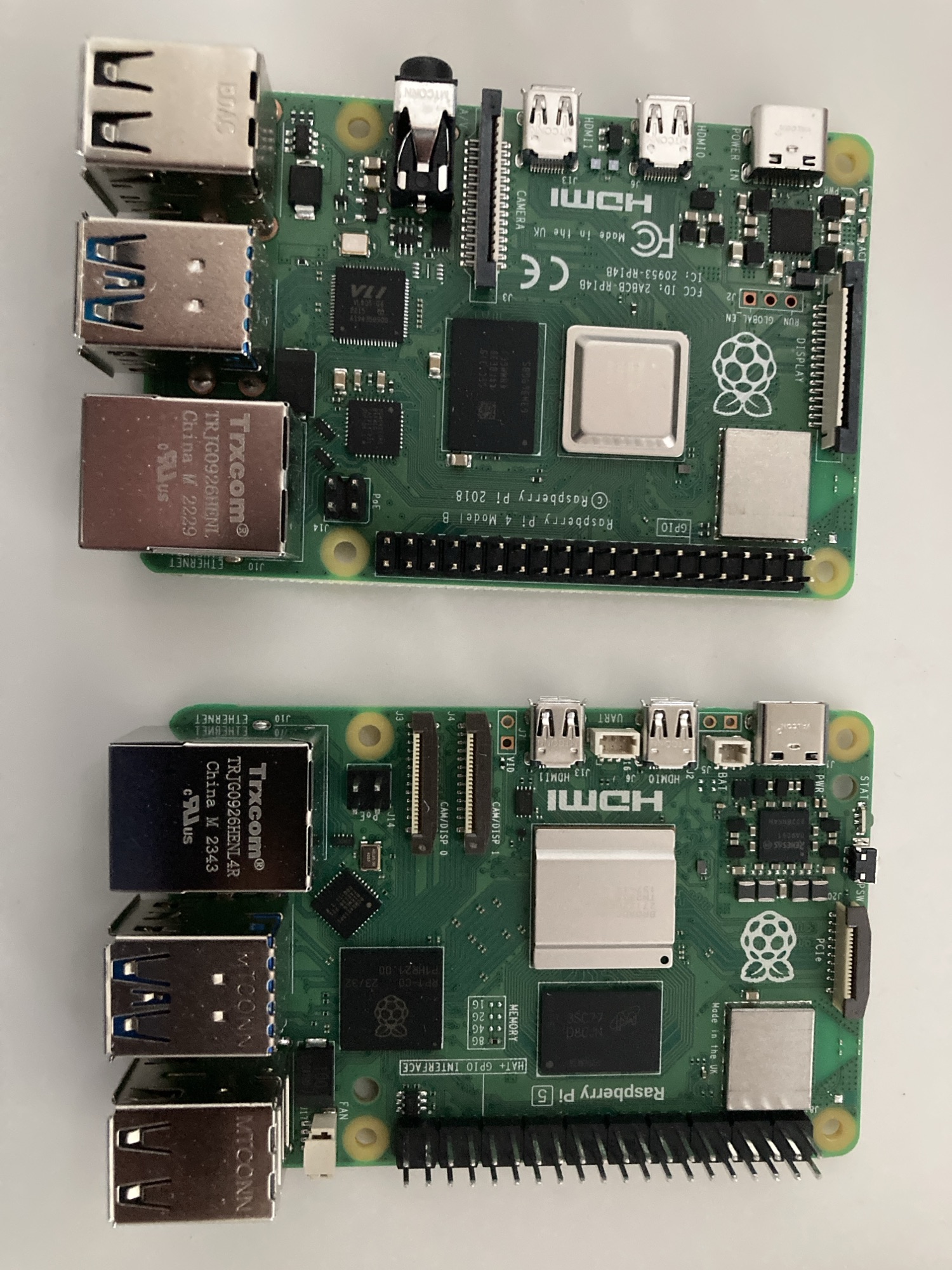

A Move from Self-Hosting on a Pi5 8gb to a Pi5 4gb

Reading Time: 2 minutes

Yesterday I started moving from a Pi5 8 GB to a Pi5 4 GB to self-host Audiobookshelf, PhotoPrism and Immich. I want to move from Ubuntu Desktop to Ubuntu Server to reduce the memory required. When I checked, I was using 3.9 GB out of 8 GB of RAM. On the Pi5 4 GB running the…