Category: Swiss walks

-

Cycling in a Strong Wind

Reading Time: 3 minutes

During the Windmill hike from Le Day to Ste Croix, one person was looking at the temperature forecast for next week. He said it would be seventeen degrees, then eighteen, and then nineteen. That’s part of the reason I decided to go for a bike ride yesterday. That decision…

-

Switching from Swisscom To Galaxus

Reading Time: 3 minutes

This morning the transition from Swisscom to Galaxus was completed. I moved from Swisscom to Galaxus because it’s up to 50 CHF cheaper. Whereas I was paying about 70 CHF with Swisscom, I now pay 19 CHF per month when I am within Switzerland, and I can pay 39 CHF per month…

-

Le Chemin Des Moulins

Reading Time: 2 minutes

Yesterday I went for a hike from Le Day down to the Saut du Day before going up towards the Aiguilles de Baume and beyond. In the process I saw six or more windmills. The hike is 21.71km long and took about 6hr41, with 1178m of ascent and 895m of descent. Moving time was…

-

Walking from one Valley to Another

Reading Time: 2 minutes

Sometimes when we go for a hike we walk along a route that makes getting back to the car quick and easy. For tomorrow’s walk, getting back to the car would take three trains and more than an hour. For Londoners this is a familiar routing situation, but for people in Switzerland…

-

Swisscom MyAI as tutor

Reading Time: 2 minutes

Yesterday I asked MyAI by Swisscom, which is still in Beta, if it could help me write a JavaScript app to generate passwords, and it did, with ease. It provided me with the JavaScript code I needed so I could cut and paste it, and then use Node to run it straight…

-

Playing with MyAI by Swisscom

Reading Time: < 1 minute

If we use Claude, Gemini, ChatGPT or a few other AI models, we are using AI that has data centres in the US. If we use Le Chat by Mistral or MyAI (Beta) by Swisscom, we are using AI that is based in Europe, or Switzerland. The data stays here. The…

-

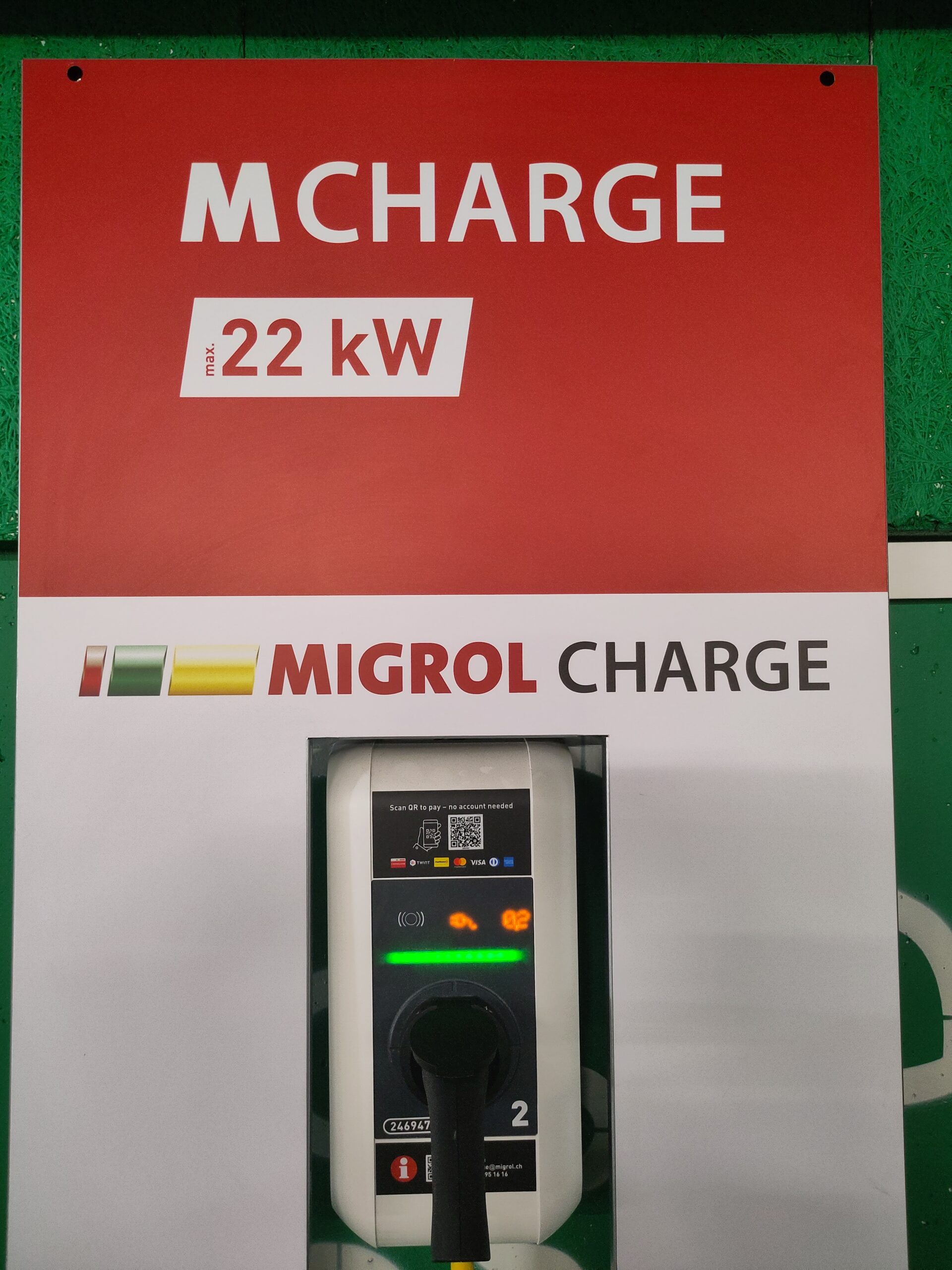

Charging and Shopping

Reading Time: 2 minutes

I know of two chargers that I can use when shopping. One of these costs 2 CHF just to connect, plus the cost of the charge. At the second shopping centre I can plug in for five minutes, or two and a half hours, and I will only pay for the…

-

The Selfish Habit of Yelling on Phones

Reading Time: 2 minutes

When some people are away it feels pleasant. The reason is that they are loud. They will use the phone and have a full conversation when a three-sentence e-mail would be enough. They will posture and show off rather than consider that not everyone in the…

-

Reading While Charging

Reading Time: 2 minutes

Today I went to charge the car. I took this opportunity to read. Some people may think "I’m going for a walk" or "I’m going shopping" or something else. When you charge a car you have an hour or two without distractions in which to spend time reading a book. You could…